Binary code is the foundation of all digital systems, and understanding its rules is essential for anyone working in the field of computer science. Whether you’re a beginner or an experienced programmer, this comprehensive guide will walk you through the four fundamental rules of binary and help you gain a solid understanding of how it works.

Rule 1: Binary Digits

The first rule of binary is that every piece of information is represented using only two digits: 0 and 1. These digits are commonly referred to as “bits,” short for binary digits. Each bit represents one of two states: 0 for off and 1 for on. By combining these bits, we can represent any piece of information, from numbers to letters to images.
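To make “combining bits” concrete, the short Python sketch below lists every pattern that a given number of bits can form. Python is our choice for illustration, and the helper name `bit_patterns` is hypothetical. Each added bit doubles the number of distinct patterns, so n bits can represent 2^n different values.

```python
from itertools import product

def bit_patterns(n: int) -> list[str]:
    """Return every string of n bits, in counting order (hypothetical helper)."""
    # product("01", repeat=n) yields tuples such as ('0', '1'); join each into a string
    return ["".join(bits) for bits in product("01", repeat=n)]

print(bit_patterns(2))       # ['00', '01', '10', '11']
print(len(bit_patterns(8)))  # 256, i.e. 2 ** 8
```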

Rule 2: Binary Arithmetic

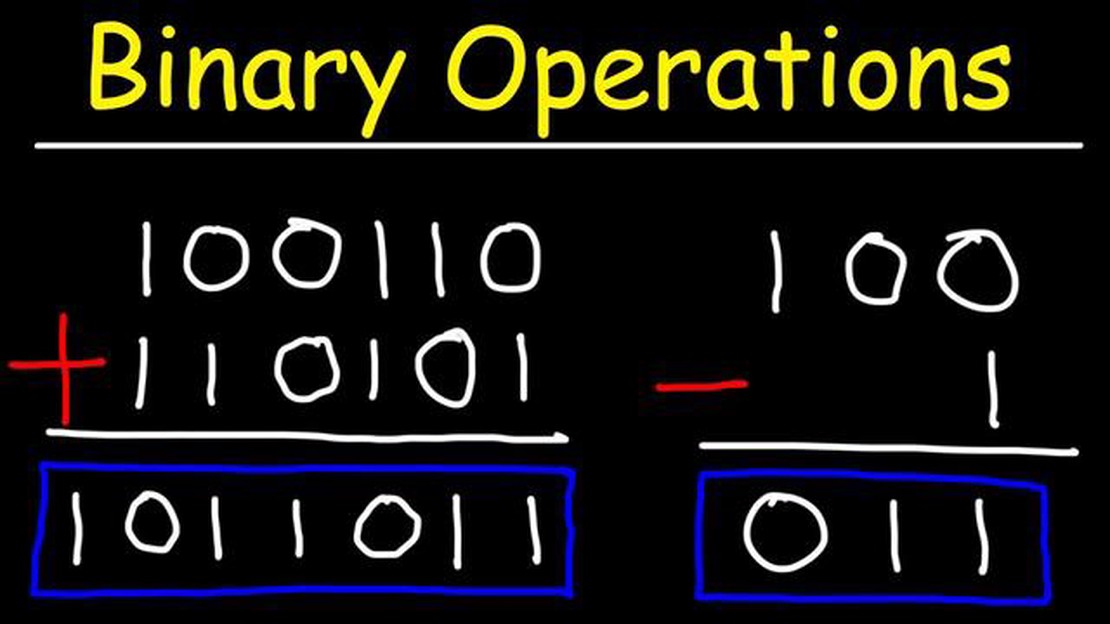

The second rule of binary covers arithmetic. Just like decimal numbers, binary numbers can be added, subtracted, multiplied, and divided. The key difference is that binary arithmetic works in base 2 instead of base 10, so each column holds only a 0 or a 1 and the digit-level rules are very short (for example, 1 + 1 = 10: write 0 and carry 1). This simplicity is what makes binary arithmetic straightforward to implement in digital circuits.
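As an illustration, Python accepts binary literals written with a `0b` prefix and performs ordinary integer arithmetic on them; the built-in `bin()` shows each result back in base 2. The operand values here are arbitrary examples.

```python
a = 0b1100  # 12 in decimal
b = 0b0011  # 3 in decimal

# bin() renders an integer in base 2 with a "0b" prefix
print(bin(a + b))   # 0b1111   (15)
print(bin(a - b))   # 0b1001   (9)
print(bin(a * b))   # 0b100100 (36)
print(bin(a // b))  # 0b100    (4, integer division)
print(bin(a % b))   # 0b0      (remainder 0)
```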

Rule 3: Binary Conversion

The third rule of binary is the ability to convert between binary and decimal numbers. While binary is the language of computers, humans are more familiar with the decimal system. Understanding how to convert between the two can be very useful for troubleshooting and debugging in computer systems.
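When troubleshooting, a language's built-in conversions are usually the quickest route. In Python, for example, `bin()` and `format()` convert from an integer to binary text, and `int(text, 2)` converts binary text back to an integer. The value 42 is an arbitrary example.

```python
n = 42

print(bin(n))              # 0b101010  (integer -> binary string with prefix)
print(format(n, "b"))      # 101010    (no prefix)
print(format(n, "08b"))    # 00101010  (zero-padded to 8 bits)

print(int("101010", 2))    # 42        (binary string -> integer)
print(int("0b101010", 0))  # 42        (base 0 infers the base from the prefix)
```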

Rule 4: Binary Logic

The fourth rule of binary is binary logic, which forms the basis of computer operations. Binary logic combines and manipulates bits using logic gates such as AND, OR, and NOT. These gates implement the operations of Boolean algebra, which is essential for computer programming and for designing digital systems.
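The gates can be modelled in software with Python's bitwise operators: `&` for AND, `|` for OR, and `^` for XOR (a further common gate, not one of the three named above). For a single bit, NOT is written here as `x ^ 1`; Python's `~` operator is avoided because it inverts every bit of a signed integer, so `~1` is `-2`. The helper name `not_bit` is hypothetical. The sketch prints a truth table, then applies the same operators to whole integers.

```python
def not_bit(x: int) -> int:
    """NOT gate for a single bit: 0 -> 1, 1 -> 0."""
    return x ^ 1

# Truth table for AND, OR, and NOT
print("a b | AND OR | NOT a")
for a in (0, 1):
    for b in (0, 1):
        print(f"{a} {b} |  {a & b}   {a | b} |   {not_bit(a)}")

# On whole integers, & | ^ act on every bit position at once
x, y = 0b1100, 0b1010
print(format(x & y, "04b"))  # 1000
print(format(x | y, "04b"))  # 1110
print(format(x ^ y, "04b"))  # 0110 (XOR: 1 where the bits differ)
```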

By following these four rules of binary, you’ll be equipped with the knowledge and skills needed to work with digital systems and understand the inner workings of computers. Whether you’re a programmer, a computer engineer, or simply someone interested in learning about the world of binary, this comprehensive guide will serve as your roadmap to mastering this fundamental concept.

Binary is a numeric system that uses only two digits, 0 and 1. It is the foundation of all digital systems and plays a crucial role in computer programming and data representation. Understanding the basics of binary is essential for anyone interested in the field of technology.


In the decimal system, we use base 10, which means we have 10 digits to represent different values: 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9. In binary, however, the base is 2, so we only have two digits to work with: 0 and 1.
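The base determines the weight of each position. Reading the same digit string 1010 first in base 10 and then in base 2 shows the difference:

$$
\begin{aligned}
1010_{10} &= 1\cdot 10^3 + 0\cdot 10^2 + 1\cdot 10^1 + 0\cdot 10^0 = 1010\\
1010_{2}  &= 1\cdot 2^3 + 0\cdot 2^2 + 1\cdot 2^1 + 0\cdot 2^0 = 8 + 2 = 10
\end{aligned}
$$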

Each digit in a binary number is called a “bit,” short for binary digit. Bits are used to represent and store information in computers. A group of 8 bits is called a “byte,” which is the smallest addressable unit of memory in most computer systems.
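As a sketch of how text ends up as bytes, the Python snippet below encodes a short string as ASCII (an encoding chosen for this example) and prints each byte as eight bits. Because a byte has 8 bits, it can hold 2^8 = 256 distinct values, from 0 to 255.

```python
data = "Hi".encode("ascii")  # a bytes object: one byte per character here

for byte in data:
    # iterating over bytes yields ints in the range 0..255
    print(chr(byte), byte, format(byte, "08b"))
# H 72 01001000
# i 105 01101001

print(0b11111111)  # 255, the largest value a single byte can hold
```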

Binary numbers can be used to represent any type of data, including text, images, and sound. However, because binary numbers can become very long and difficult to read, a shorthand notation called “hexadecimal” is often used. Hexadecimal uses base 16, with the digits 0-9 and the letters A-F representing values from 0 to 15. Because 16 = 2^4, each hexadecimal digit corresponds to exactly four bits, so one byte can always be written as two hexadecimal digits.
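Since each hexadecimal digit stands for exactly four bits, converting from binary to hexadecimal is a matter of grouping. The hypothetical Python helper `binary_to_hex` below pads a bit string on the left to a multiple of four, then maps each 4-bit group to one hex digit.

```python
def binary_to_hex(bits: str) -> str:
    """Convert a string of 0s and 1s to uppercase hex by 4-bit grouping."""
    # Left-pad with zeros so the length is a multiple of 4
    bits = bits.zfill((len(bits) + 3) // 4 * 4)
    digits = "0123456789ABCDEF"
    groups = (bits[i:i + 4] for i in range(0, len(bits), 4))
    # Each 4-bit group has a value 0..15, i.e. exactly one hex digit
    return "".join(digits[int(group, 2)] for group in groups)

print(binary_to_hex("10101111"))  # AF  (1010 -> A, 1111 -> F)
print(binary_to_hex("111"))       # 7   (padded to 0111)
print(format(0xAF, "08b"))        # 10101111, going back the other way
```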

Converting a decimal number to binary is a simple process: repeatedly divide the number by 2 and record the remainders until the quotient reaches 0. The binary representation is the sequence of remainders read in reverse order. For example, the decimal number 10 is 1010 in binary: 10 divided by 2 is 5 with a remainder of 0, 5 divided by 2 is 2 with a remainder of 1, 2 divided by 2 is 1 with a remainder of 0, and 1 divided by 2 is 0 with a remainder of 1. Reading the remainders from last to first gives 1010.
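The repeated-division method translates directly into a loop. The sketch below is in Python, and the function name `to_binary` is our own; the built-in `bin()` does the same job and is used here only to check the result.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary by repeated division by 2."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    if n == 0:
        return "0"
    remainders = []
    while n > 0:
        n, r = divmod(n, 2)  # the quotient carries on; the remainder is the next bit
        remainders.append(str(r))
    # Remainders come out least significant first, so read them in reverse
    return "".join(reversed(remainders))

print(to_binary(10))                 # 1010
print(to_binary(10) == bin(10)[2:])  # True
```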

Arithmetic operations such as addition, subtraction, and multiplication follow their own digit-level rules in binary, for example carrying a 1 to the next column whenever a column in an addition sums to 2 or more. These operations are vital in computer programming and digital logic design.
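Multiplication is a good example of such an algorithm. In the schoolbook shift-and-add method (one standard technique; actual processors may use faster multiplier circuits), every 1 bit in the multiplier contributes a copy of the multiplicand shifted left by that bit's position, and the copies are summed. The Python function name below is hypothetical.

```python
def shift_and_add_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers using only shifts and additions."""
    result = 0
    shift = 0
    while b > 0:
        if b & 1:                 # lowest remaining bit of the multiplier is 1
            result += a << shift  # add the multiplicand shifted into position
        b >>= 1                   # move on to the next multiplier bit
        shift += 1
    return result

answer = shift_and_add_multiply(0b101, 0b11)  # 5 * 3
print(bin(answer))  # 0b1111 (15)
```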

Overall, understanding binary is crucial for anyone working with computers or technology. It forms the basis of how information is stored and processed, and it allows us to create and manipulate a wide range of digital data.

Binary is the foundation of modern computing and is essential for anyone working in the field of technology. Without a solid understanding of binary, it is difficult to fully grasp how computers work and how data is stored and processed.

Binary is a numerical system that uses only two digits, 0 and 1, to represent all data. Whatever language a program is written in, it is ultimately executed as binary machine instructions, and binary is used to represent everything from numbers and text to images and videos. When you press a key on your keyboard, send a text message, or open a program, binary is at work behind the scenes.


Understanding binary helps you see how computers interpret the code you write. It enables you to manipulate and transform data at the bit level, perform complex calculations, and create algorithms that solve real-world problems.
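One everyday example of manipulating data at the bit level is bit flags, where each bit of an integer records one yes/no setting. In the Python sketch below, the flag names are hypothetical and chosen only for illustration: OR sets a bit, AND tests it, and AND with an inverted mask clears it.

```python
# Hypothetical permission flags, one bit each
READ = 0b001
WRITE = 0b010
EXECUTE = 0b100

perms = 0
perms |= READ | WRITE         # set two bits with OR
print(format(perms, "03b"))   # 011

print(bool(perms & WRITE))    # True  (test a bit with AND)
perms &= ~WRITE               # clear a bit: AND with the inverted mask
print(format(perms, "03b"))   # 001
print(bool(perms & EXECUTE))  # False
```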

Additionally, binary is the language of computer hardware. Inside a computer, data is stored in binary format, and the central processing unit (CPU) works with binary instructions to perform tasks. Without a solid understanding of binary, it is difficult to troubleshoot hardware issues or optimize performance.

Moreover, understanding binary opens up a world of opportunities for learning and career development. Many high-paying jobs in computer science, software development, and cybersecurity require a deep understanding of binary and its applications. By mastering binary, you can enhance your technical skills and unlock a wide range of career opportunities.

In conclusion, understanding binary is critical for anyone working in the field of technology. It is the language of modern computing, and without it, it is hard to fully comprehend how computers work or how the code you write is actually executed. By investing time and effort into learning binary, you can gain a competitive edge in the tech industry and open up doors to exciting and lucrative career prospects.

Binary is a numerical system that uses only two digits, 0 and 1, to represent all numbers and information. It is important because computers use binary as their primary language, allowing them to process and store information.

In another common usage, “the four rules of binary” refers to the four arithmetic operations: addition, subtraction, multiplication, and division. These rules are used to perform mathematical operations in the binary system.
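Division in binary follows the same long-division procedure as in decimal, except that at each step the divisor goes into the running remainder either once or not at all. The Python sketch below, with a hypothetical function name, brings down one bit at a time and subtracts the divisor whenever it fits; for brevity the running remainder is kept as a Python integer.

```python
def binary_long_divide(dividend: str, divisor: str) -> tuple[str, str]:
    """Long division on binary strings; returns (quotient, remainder) in binary."""
    d = int(divisor, 2)
    if d == 0:
        raise ZeroDivisionError("division by zero")
    remainder = 0
    quotient_bits = []
    for bit in dividend:
        # "Bring down" the next bit: shift the remainder left and append the bit
        remainder = (remainder << 1) | int(bit)
        if remainder >= d:
            remainder -= d             # the divisor fits once: subtract it
            quotient_bits.append("1")
        else:
            quotient_bits.append("0")  # the divisor does not fit
    quotient = "".join(quotient_bits).lstrip("0") or "0"
    return quotient, format(remainder, "b")

print(binary_long_divide("1101", "11"))  # ('100', '1'): 13 / 3 = 4 remainder 1
```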

To add numbers in binary, you start from the rightmost column and work your way towards the left, adding the two digits in each column plus any carry from the column to its right. If the column sum is 0 or 1, you write that sum in the result with no carry. If the sum is 2 or 3, you write the sum minus 2 (that is, 0 or 1) and carry 1 into the next column to the left.
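The column-by-column procedure can be sketched in Python as follows. The function name `add_binary` is hypothetical, and the inputs are strings of 0s and 1s.

```python
def add_binary(x: str, y: str) -> str:
    """Add two binary strings column by column, right to left, with carries."""
    result = []
    carry = 0
    i, j = len(x) - 1, len(y) - 1
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += int(x[i])
            i -= 1
        if j >= 0:
            total += int(y[j])
            j -= 1
        result.append(str(total % 2))  # digit written in this column
        carry = total // 2             # 1 if the column sum was 2 or 3
    return "".join(reversed(result)) or "0"

print(add_binary("1011", "110"))  # 10001  (11 + 6 = 17)
```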

To convert a binary number to decimal, you assign each digit a place value that is a power of 2, starting from the rightmost digit: 1 for the rightmost digit, 2 for the next digit, 4 for the next digit, and so on. Then, you multiply each digit by its corresponding place value and sum up the results.
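The place-value method can be sketched in Python like this; the function name `binary_to_decimal` is hypothetical, and the built-in `int(text, 2)` gives the same result.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum each digit times its place value (1, 2, 4, 8, ...) from the right."""
    total = 0
    place_value = 1
    for digit in reversed(bits):
        total += int(digit) * place_value
        place_value *= 2  # each step to the left doubles the place value
    return total

print(binary_to_decimal("1010"))  # 10  (8 + 0 + 2 + 0)
```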
