
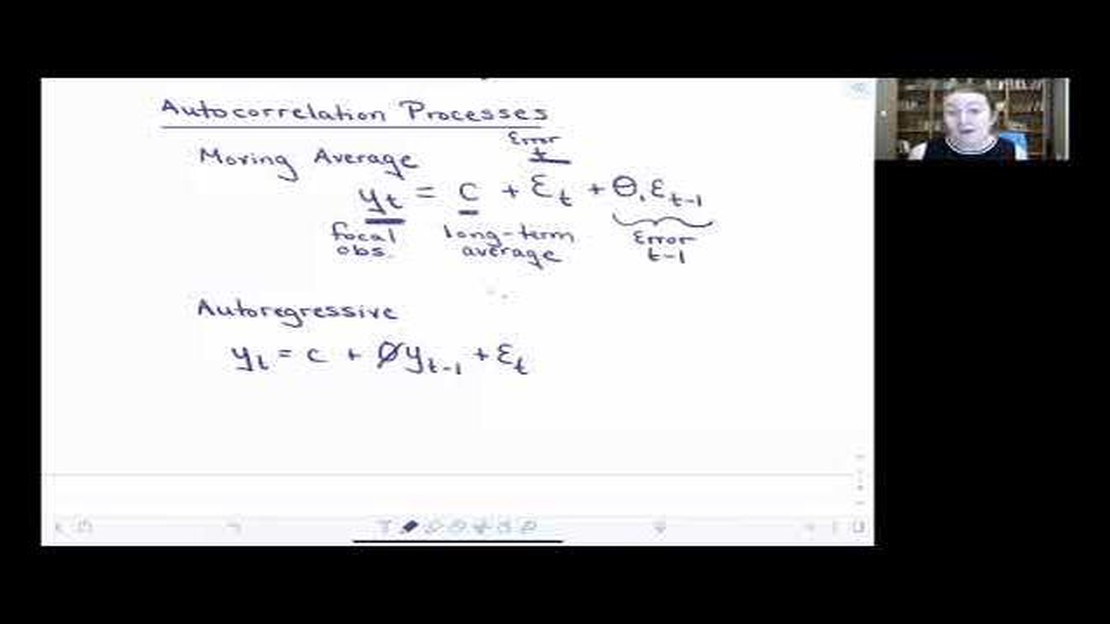

Autoregressive and Moving Average models are two common time series models used in statistics and econometrics. While they both describe patterns and dependencies in time series data, they have distinct characteristics and are used in different contexts.

An autoregressive (AR) model is a time series model in which the current value of a variable is a linear combination of its own past values plus a random error term. In other words, an AR model assumes that future values can be predicted from the variable's own history. It is useful for understanding and forecasting processes that show persistence, where each value is correlated with the values just before it.

A moving average (MA) model, by contrast, is a time series model in which the current value of a variable is a linear combination of the current and past error terms (random shocks). In other words, an MA model assumes that future values can be predicted from past prediction errors. It is useful for processes in which a random shock affects the series for only a limited number of periods.
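For reference, the verbal definition of the MA model can be written as an equation. The symbols below follow common textbook notation and are assumptions rather than notation taken from this article: $\mu$ is the mean of the series, $\theta_1, \dots, \theta_q$ are the moving average coefficients, $q$ is the number of past error terms included, and $\varepsilon_t$ is the error term at time $t$.

$$
X_t = \mu + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \dots + \theta_q \varepsilon_{t-q}
$$

Under this form, a shock $\varepsilon_t$ influences the series for at most $q$ further periods.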

One key distinction between AR and MA models is the nature of the dependencies they capture. An AR model captures linear dependence of the series on its own past values, so in a stationary AR model the effect of a shock fades gradually; an MA model captures linear dependence on past error terms, so the effect of a shock disappears after a fixed number of periods. Accordingly, AR models suit data with persistent, slowly decaying correlation, while MA models suit data whose correlation is short-lived.

Understanding the distinction between autoregressive and moving average models is essential for accurately modeling and forecasting time series data. By recognizing the underlying patterns and dependencies in the data, researchers and analysts can choose the appropriate model to analyze and predict future values, leading to more accurate and reliable forecasts.

An autoregressive (AR) model is a statistical model that is used to analyze time series data. It is based on the idea that the value of a variable at any given time is determined by its own past values, hence the name “autoregressive”. AR models are widely used in various fields, including economics, finance, and engineering, to forecast future values and understand the underlying patterns in the data.

The main concept behind an AR model is that the current value of a variable is a linear combination of its previous values, with each previous value being multiplied by a corresponding coefficient. The number of previous values considered in the model is denoted by the parameter “p”. For example, an AR(1) model uses only the immediate previous value, while an AR(2) model uses the two immediate previous values.

Mathematically, an AR(p) model can be represented as:

| AR(1) | AR(2) | AR(p) |
|---|---|---|
| X(t) = c + φ1X(t-1) + ε(t) | X(t) = c + φ1X(t-1) + φ2X(t-2) + ε(t) | X(t) = c + φ1X(t-1) + φ2X(t-2) + … + φpX(t-p) + ε(t) |

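Where X(t) is the value of the variable at time t, c is the constant term, φ1 to φp are the coefficients, X(t-1) to X(t-p) are the lagged values, and ε(t) is the error term.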

The coefficients of an AR model are typically estimated by the method of least squares or by maximum likelihood. Once the coefficients are estimated, the model can be used to predict future values from the current and previous values of the variable.
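As a rough illustration of least squares estimation, the sketch below regresses a series on its own lagged values using NumPy. The function names `fit_ar_least_squares` and `demo_series`, and the example parameters (c = 1.0, φ1 = 0.5, φ2 = -0.3), are hypothetical choices made for this example and do not come from the article.

```python
import numpy as np


def fit_ar_least_squares(x, p):
    """Estimate c and phi_1..phi_p of an AR(p) model by ordinary least squares."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    if n <= p:
        raise ValueError("series must be longer than the order p")
    # Column i holds X(t-i) for every t from p to n-1.
    lags = [x[p - i:n - i] for i in range(1, p + 1)]
    design = np.column_stack([np.ones(n - p)] + lags)
    target = x[p:]  # X(t) for t = p..n-1
    params, *_ = np.linalg.lstsq(design, target, rcond=None)
    return params[0], params[1:]


def demo_series(n=2000, seed=0):
    """Hypothetical test data: AR(2) with c=1.0, phi1=0.5, phi2=-0.3."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n)
    for t in range(2, n):
        x[t] = 1.0 + 0.5 * x[t - 1] - 0.3 * x[t - 2] + rng.standard_normal()
    return x


if __name__ == "__main__":
    c_hat, phi_hat = fit_ar_least_squares(demo_series(), p=2)
    print("estimated constant:", c_hat)
    print("estimated coefficients:", phi_hat)
```

With a reasonably long series, the estimates should land close to the values used to generate the data. In practice, a statistics library would normally be used instead of a hand-written regression.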


AR models can be useful in analyzing and forecasting time series data, as they capture the temporal dependencies and patterns present in the data. They are particularly effective when the data exhibits a degree of persistence or correlation between adjacent values. By understanding the basics of autoregressive models, researchers and analysts can gain valuable insights into the behavior and future trends of the underlying data.

An autoregressive (AR) model is a type of time series model that represents the future values of a variable as a linear combination of its past values. In other words, it assumes that the value of the variable at any given time point is a function of its previous values.

AR models are characterized by two main components: the order p and the coefficients φ. The order p represents the number of lagged values used to predict the current value, while the coefficients φ represent the weights assigned to each lagged value.

Mathematically, an AR(p) model can be represented as:


$$
X_t = c + \varphi_1 X_{t-1} + \varphi_2 X_{t-2} + \dots + \varphi_p X_{t-p} + \varepsilon_t
$$

Where $X_t$ represents the value of the variable at time $t$, $c$ is the constant term, $\varphi_1$ to $\varphi_p$ are the coefficients, $X_{t-1}$ to $X_{t-p}$ are the lagged values, and $\varepsilon_t$ is the error term.
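To make the equation concrete, the following sketch generates a series directly from the AR(p) recursion. It assumes standard normal error terms and discards an initial burn-in period so that the arbitrary starting values have little influence; the function name `simulate_ar` and its parameters are hypothetical, chosen only for this example.

```python
import numpy as np


def simulate_ar(coeffs, c=0.0, n=500, burn_in=200, seed=0):
    """Simulate X_t = c + phi_1*X_{t-1} + ... + phi_p*X_{t-p} + eps_t.

    Assumes eps_t is standard normal noise (an assumption of this sketch).
    """
    coeffs = np.asarray(coeffs, dtype=float)
    p = len(coeffs)
    rng = np.random.default_rng(seed)
    total = n + burn_in + p
    x = np.zeros(total)
    eps = rng.standard_normal(total)
    for t in range(p, total):
        # x[t-p:t][::-1] is [X_{t-1}, X_{t-2}, ..., X_{t-p}]
        x[t] = c + np.dot(coeffs, x[t - p:t][::-1]) + eps[t]
    return x[-n:]  # drop the start-up values and the burn-in period


if __name__ == "__main__":
    series = simulate_ar([0.8], c=0.0, n=10)
    print(series)
```

Larger coefficients make neighbouring values more alike, which is the persistence an AR model is meant to capture.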

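The coefficients φ1 to φp determine the strength and direction of the relationship between past and current values. If all coefficients are zero, the model reduces to the constant c plus the error term εt. The process is stationary, with the influence of past values dying out over time, when all roots of the characteristic polynomial 1 − φ1z − … − φpz^p lie outside the unit circle; for an AR(1) model this means |φ1| < 1. Coefficients that are each positive and less than one are not enough on their own: an AR(2) model with φ1 = φ2 = 0.6 is not stationary.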
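Whether a given set of coefficients yields a stationary process can be checked numerically. The sketch below uses the standard condition that all roots of z^p − φ1z^(p−1) − … − φp lie strictly inside the unit circle, which is equivalent to the roots of 1 − φ1z − … − φpz^p lying outside it. The function name `is_stationary` is a hypothetical helper, not part of any library.

```python
import numpy as np


def is_stationary(phi):
    """Return True if the AR model with coefficients phi_1..phi_p is stationary."""
    phi = np.asarray(phi, dtype=float)
    if phi.size == 0:
        return True  # no lagged terms: constant plus noise
    # Coefficients of z^p - phi_1*z^(p-1) - ... - phi_p, highest power first.
    poly = np.concatenate(([1.0], -phi))
    roots = np.roots(poly)
    return bool(np.all(np.abs(roots) < 1.0))


if __name__ == "__main__":
    print(is_stationary([0.5]))        # True: |phi_1| < 1
    print(is_stationary([1.0]))        # False: a random walk
    print(is_stationary([0.6, 0.6]))   # False, although both are below one
    print(is_stationary([0.5, -0.3]))  # True
```

The third case shows that coefficients which are each positive and below one can still produce a non-stationary process when there is more than one lag.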

AR models are widely used in various fields, such as finance, economics, and meteorology, to forecast future values based on historical data. They are particularly useful when the data exhibits autocorrelation, meaning that the current value is dependent on its past values.

Autoregressive models rely on the past values of the time series to forecast future values, while moving average models use previous forecast errors to predict future values.

Autoregressive models are fitted by regressing the time series on its own lagged values. Moving average models, on the other hand, depend on past forecast errors, which are not observed directly, so their coefficients are usually estimated iteratively, for example by maximum likelihood.
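The role of past errors in a moving average model can be illustrated with a small simulation. The sketch below builds a series as a weighted sum of the current and previous error terms, assuming standard normal errors, and measures the sample autocorrelation. The names `simulate_ma` and `sample_acf`, and the coefficient 0.6 in the demo, are hypothetical choices for this example.

```python
import numpy as np


def simulate_ma(theta, mu=0.0, n=500, seed=0):
    """Simulate X_t = mu + eps_t + theta_1*eps_{t-1} + ... + theta_q*eps_{t-q}."""
    theta = np.asarray(theta, dtype=float)
    q = len(theta)
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal(n + q)
    # Weight 1 on the current error, theta_j on the error j steps back.
    weights = np.concatenate(([1.0], theta))
    # 'valid' mode keeps only the positions where all q past errors exist.
    return mu + np.convolve(eps, weights, mode="valid")


def sample_acf(x, lag):
    """Sample autocorrelation of x at a positive integer lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))


if __name__ == "__main__":
    series = simulate_ma([0.6], n=20000)
    for lag in (1, 2, 3):
        print(lag, sample_acf(series, lag))
```

For an MA(1) series, the autocorrelation at lag 1 is θ1 / (1 + θ1²) in theory and zero at longer lags, so the printed values should drop to roughly zero after the first lag. In an AR series the autocorrelation decays gradually instead.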

Both autoregressive and moving average models can be used for forecasting stock prices, but autoregressive models may be more suitable as they take into account the past values of the stock prices and any trends or patterns.

Autoregressive and moving average models are not restricted to a single field. They are standard tools of time series analysis and are applied in areas such as economics, finance, and engineering to forecast future values from past data.

Autoregressive models can be limited by their sensitivity to outliers and the need for a large amount of historical data. Moving average models can be limited by their inability to capture long-term trends and the potential for overfitting if too many parameters are used.

Autoregressive (AR) models predict future values from past observations of the variable being modeled, while moving average (MA) models predict future values from past forecast errors.
